概要

お悩み

(修正)話した内容をUnity上でテキスト表示するにはどうしたらいいの?

この記事でわかること

・Unity上で録音する方法

・Whisper(Open AI)を用いて文字起こしする方法

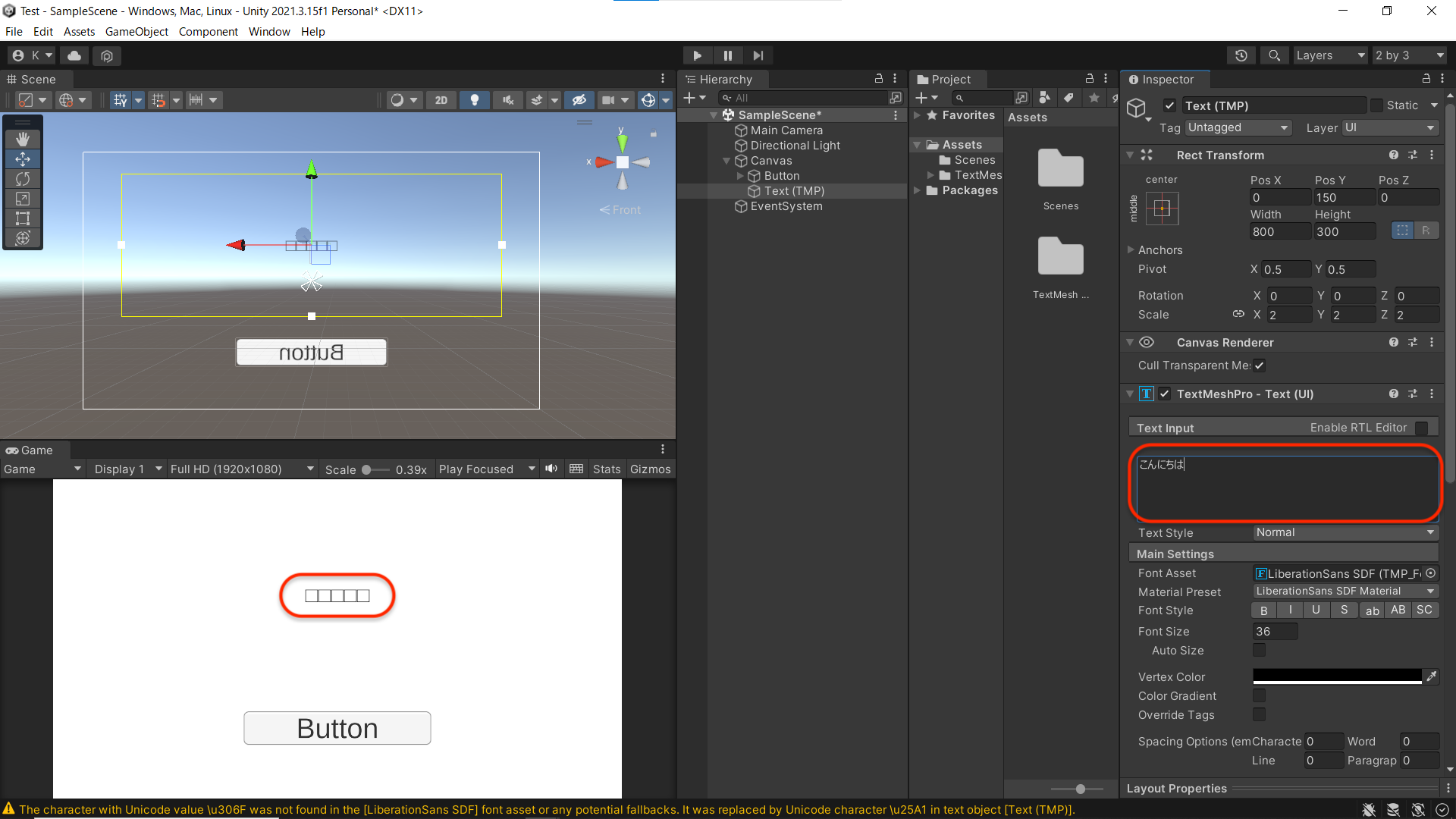

・文字起こししたテキストをUnity上に表示する方法

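Steps

Setup

① Setting up Whisper

Whisper is a speech recognition (speech-to-text) model developed by OpenAI, and it can be used through the OpenAI API.

Reference: "Whisper とは?" (What is Whisper?) > iPPO

In this implementation, we use Whisper to transcribe the recorded audio.

To use the Whisper API you need an OpenAI API key, so the first step is to obtain one.

Note that the API is not free: usage is billed.

For detailed instructions on obtaining a key, see the following article.

Reference: "OpenAI APIの入手方法(ChatGPT・Whisper)" (How to get an OpenAI API key for ChatGPT and Whisper) > iPPO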
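The source code later in this article writes the key directly into the script's `OpenAIAPIKey` field. You can keep the key out of your project files instead, which makes it less likely to end up in version control.

The following is a minimal sketch of that approach. It assumes the key is stored in an environment variable named `OPENAI_API_KEY`. Both that variable name and the `ApiKeyLoader` helper are hypothetical; neither is part of Unity or the OpenAI API. Environment variables are mainly practical in the Editor and in desktop builds.

```csharp
using System;

// Hypothetical helper (not a Unity or OpenAI API): reads the OpenAI API key
// from an environment variable instead of hardcoding it in a script.
// The default name OPENAI_API_KEY is an assumed convention.
public static class ApiKeyLoader
{
    public const string DefaultVariable = "OPENAI_API_KEY";

    // Returns the trimmed key, or throws if the variable is missing or blank,
    // so a forgotten key fails immediately instead of as a 401 from the API.
    public static string Load(string variableName = DefaultVariable)
    {
        string key = Environment.GetEnvironmentVariable(variableName);
        if (string.IsNullOrWhiteSpace(key))
        {
            throw new InvalidOperationException(
                $"Environment variable {variableName} is not set.");
        }
        return key.Trim();
    }
}
```

With this in place, `SpeechToText.Start()` could assign `OpenAIAPIKey = ApiKeyLoader.Load();` before calling `Launch()`.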

Once you have your API key, move on to the initial Unity setup.

② Initial Unity setup

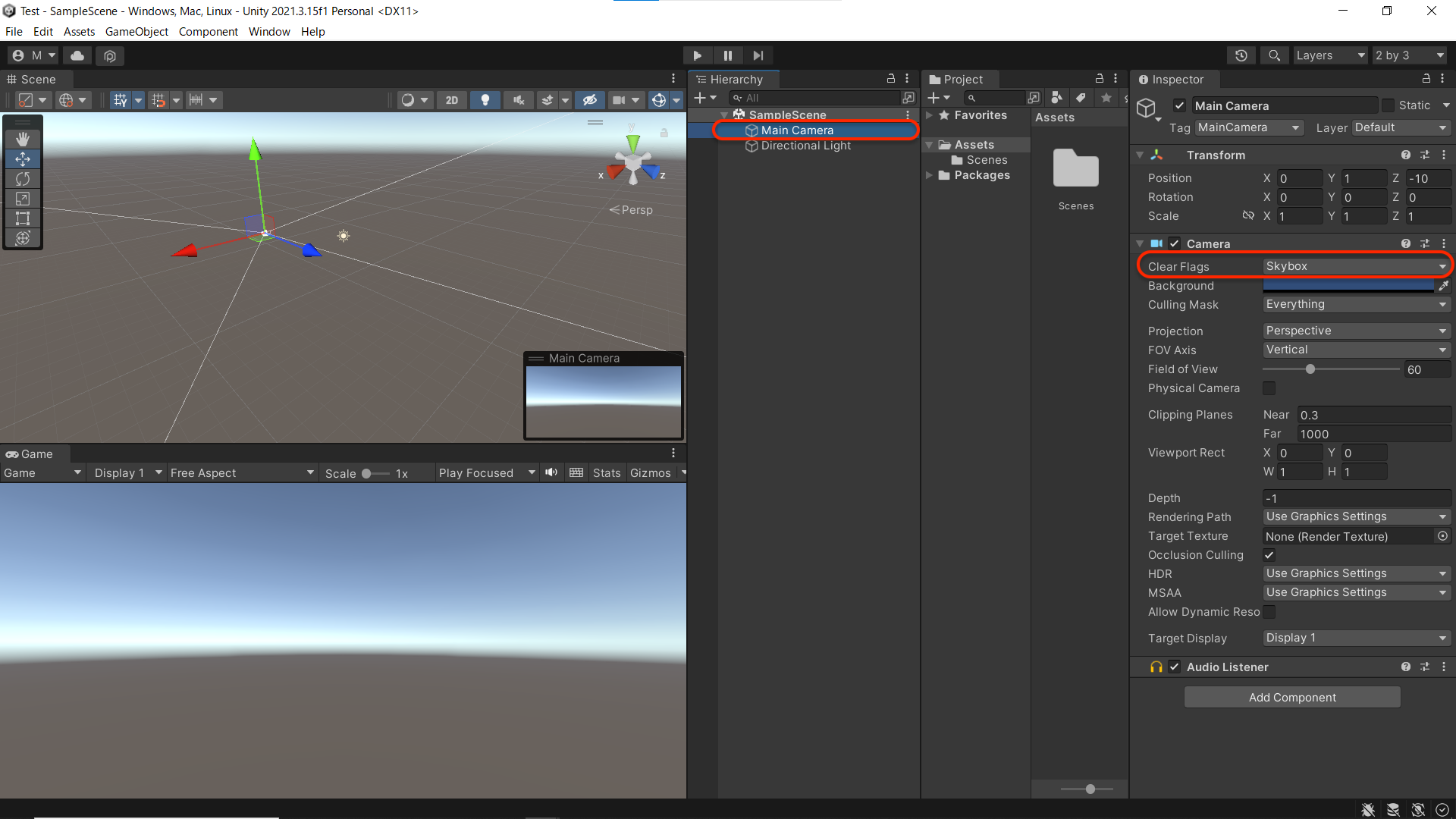

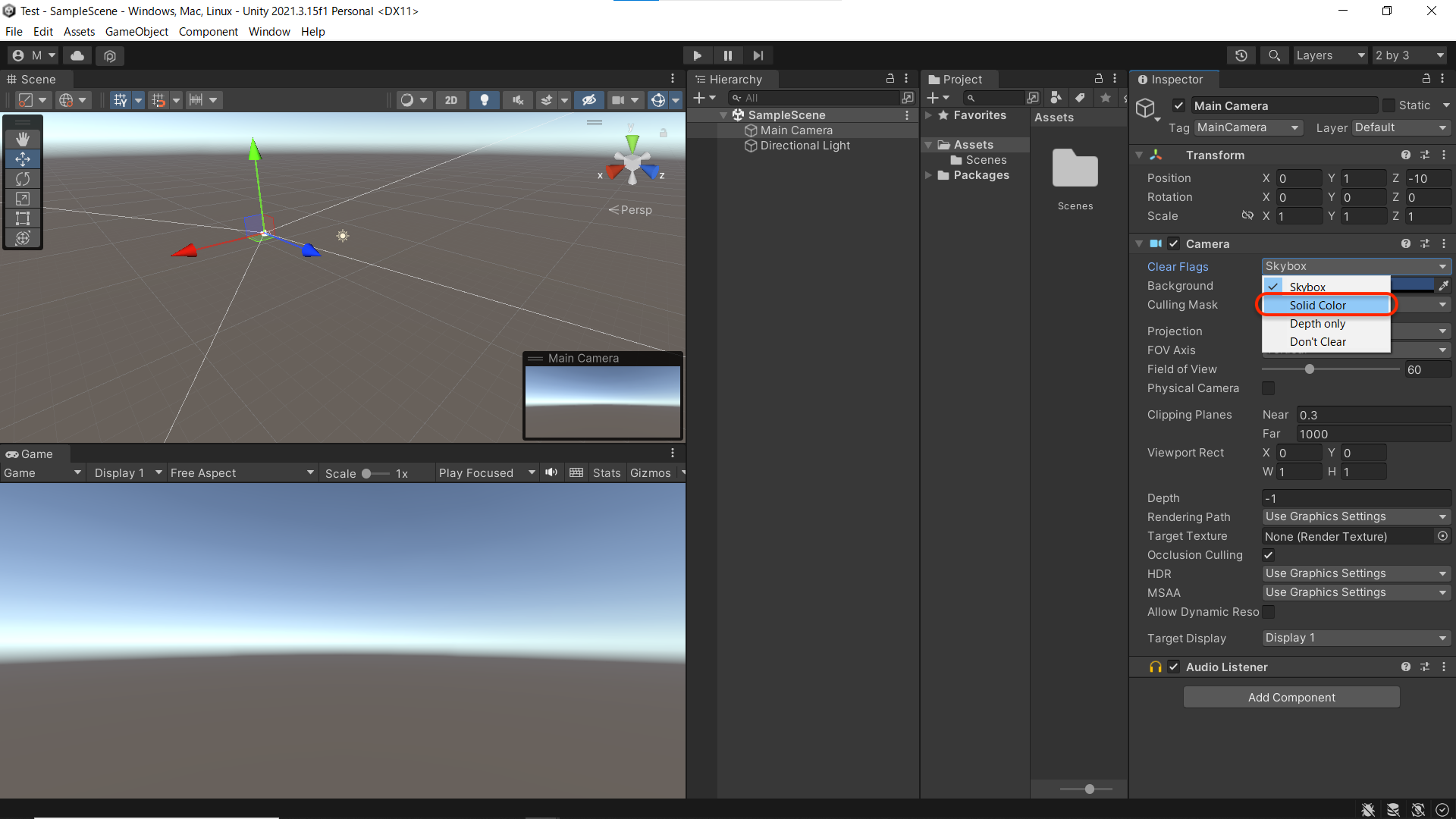

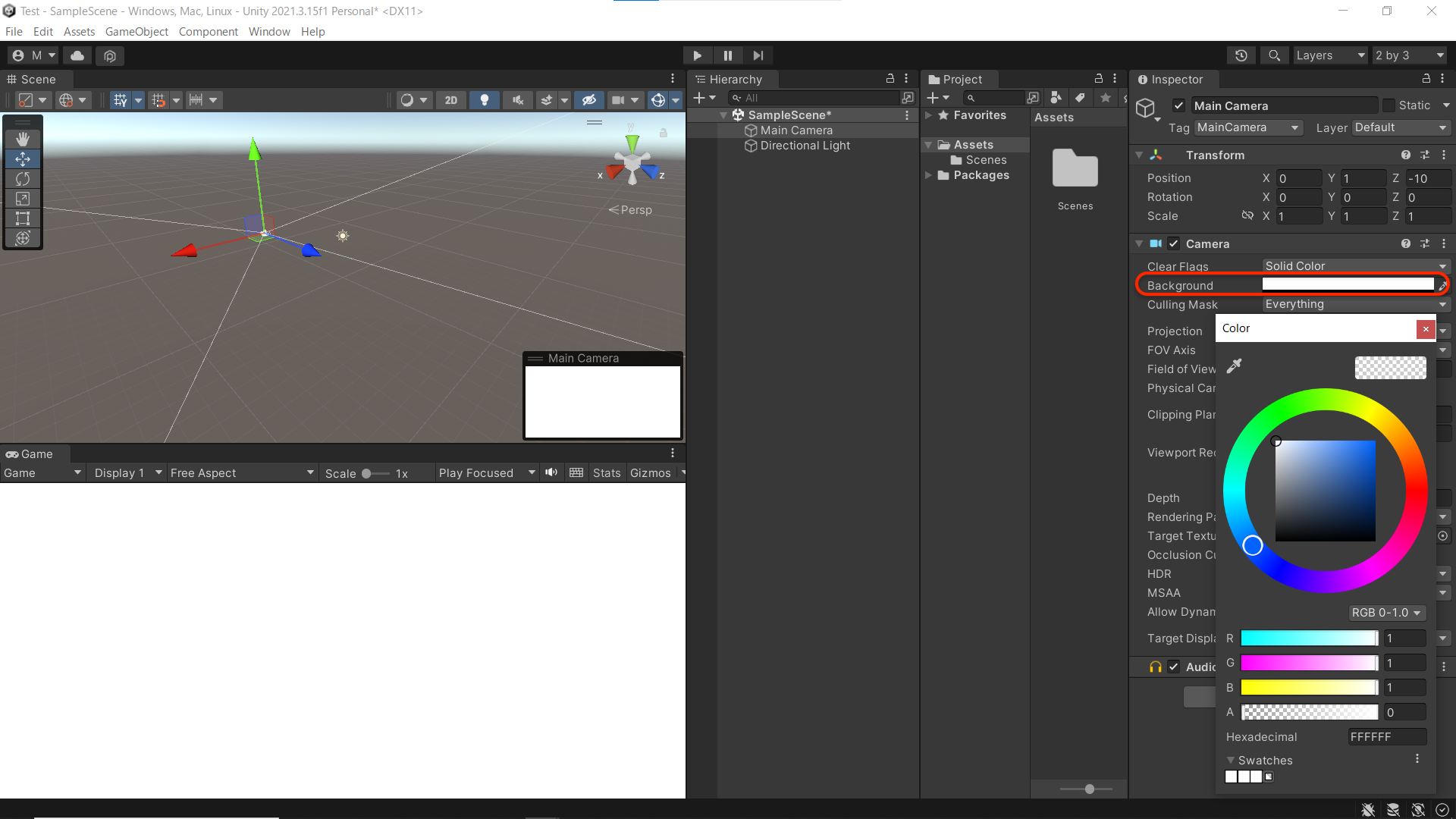

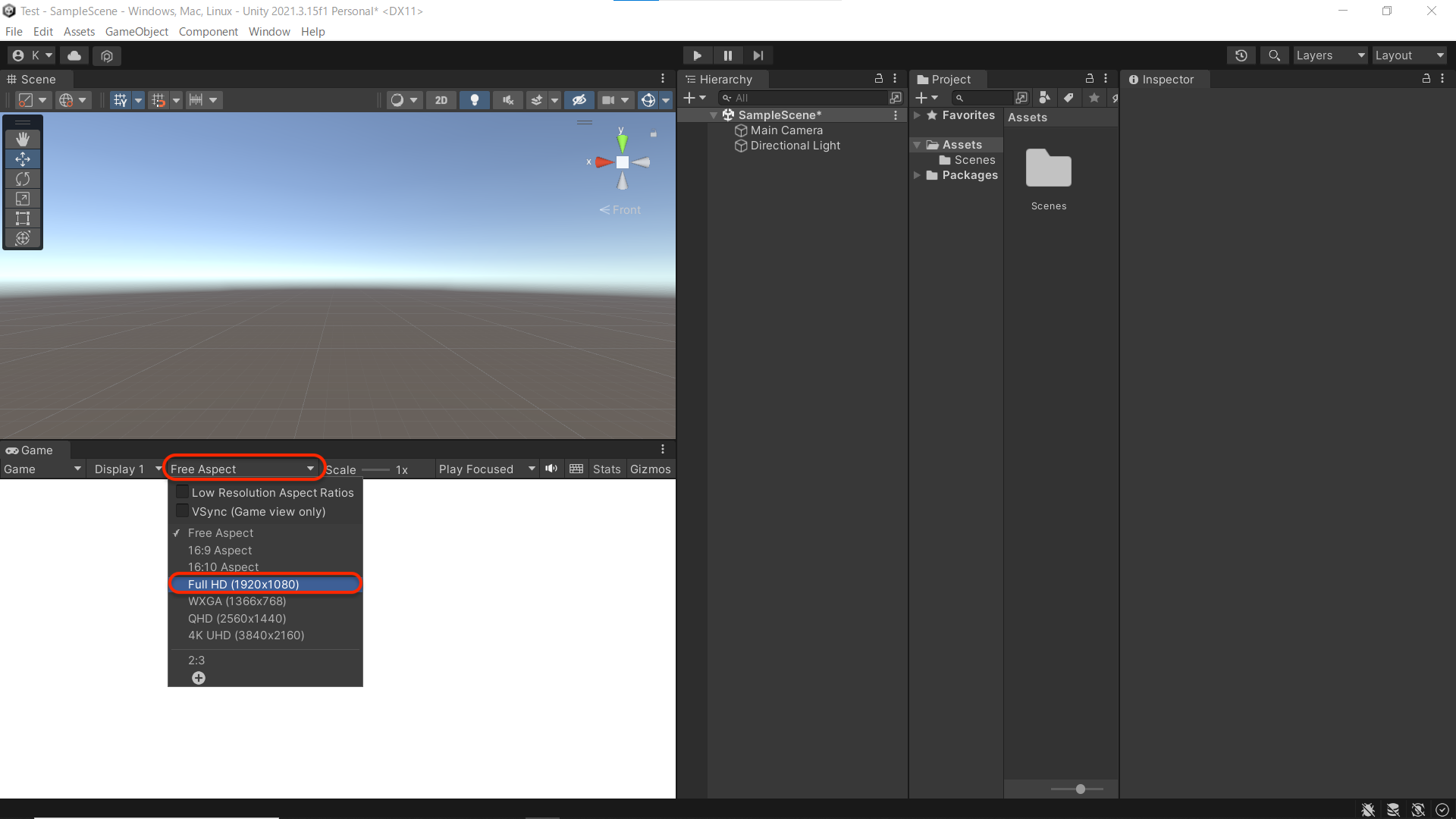

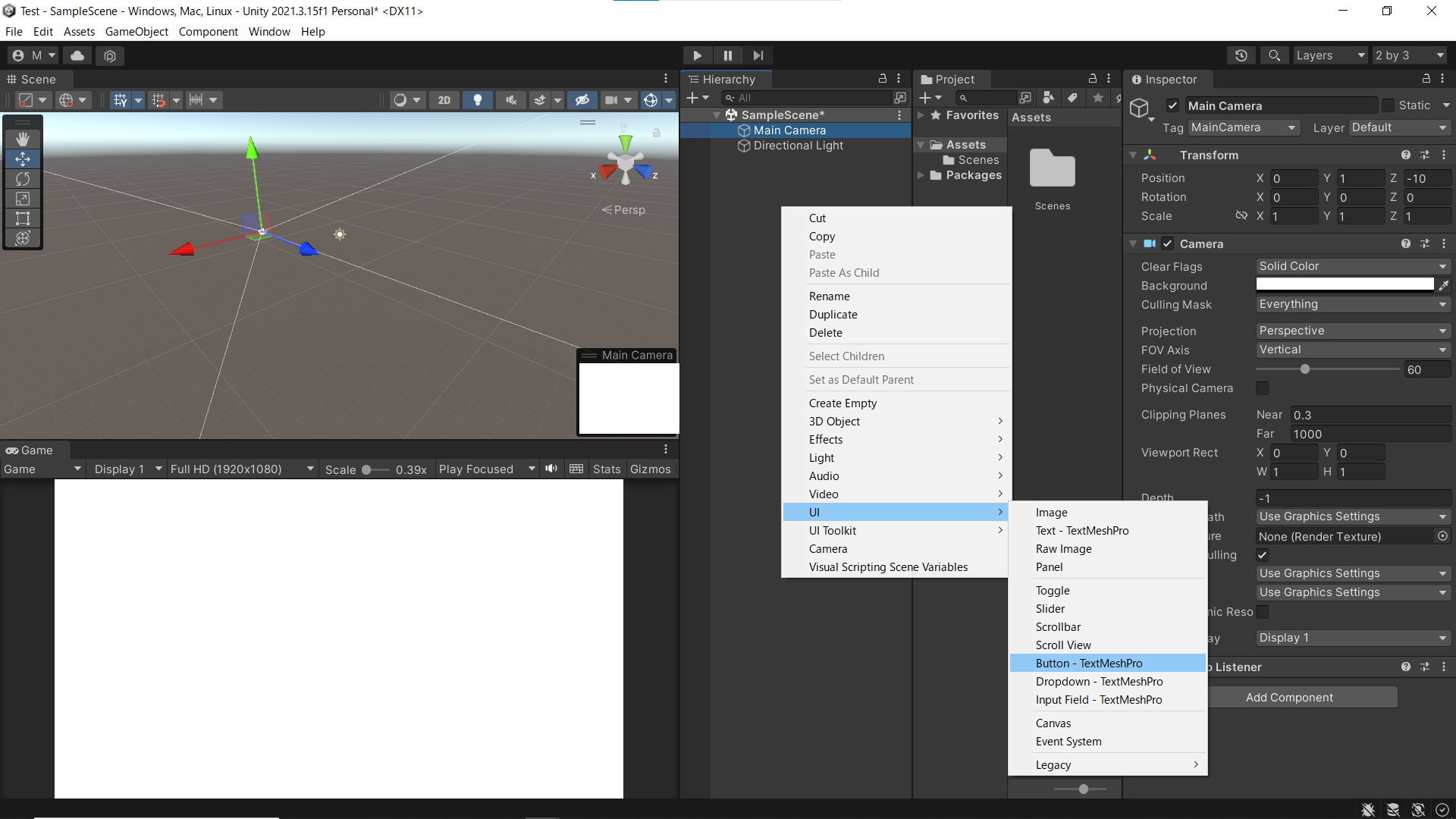

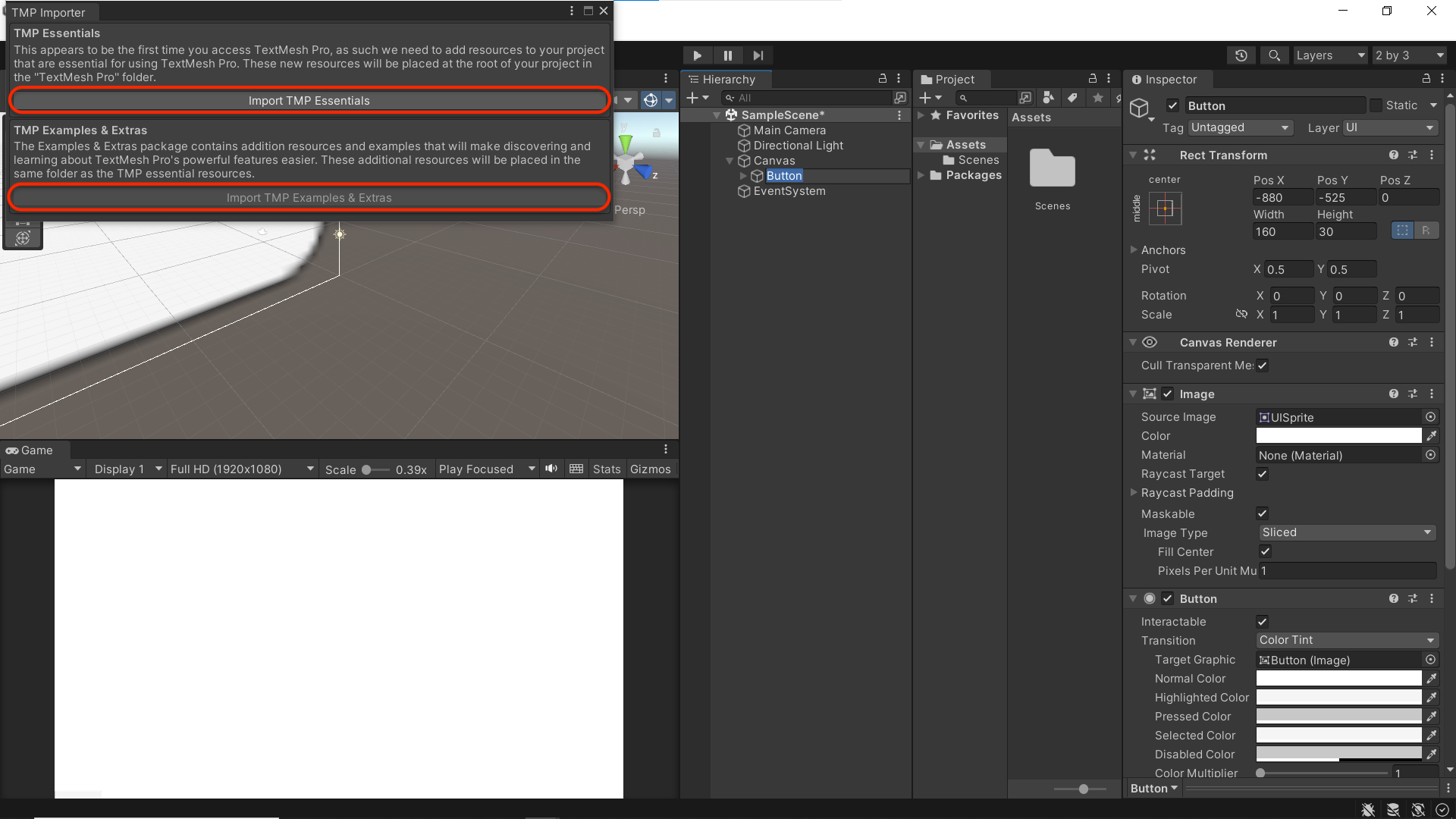

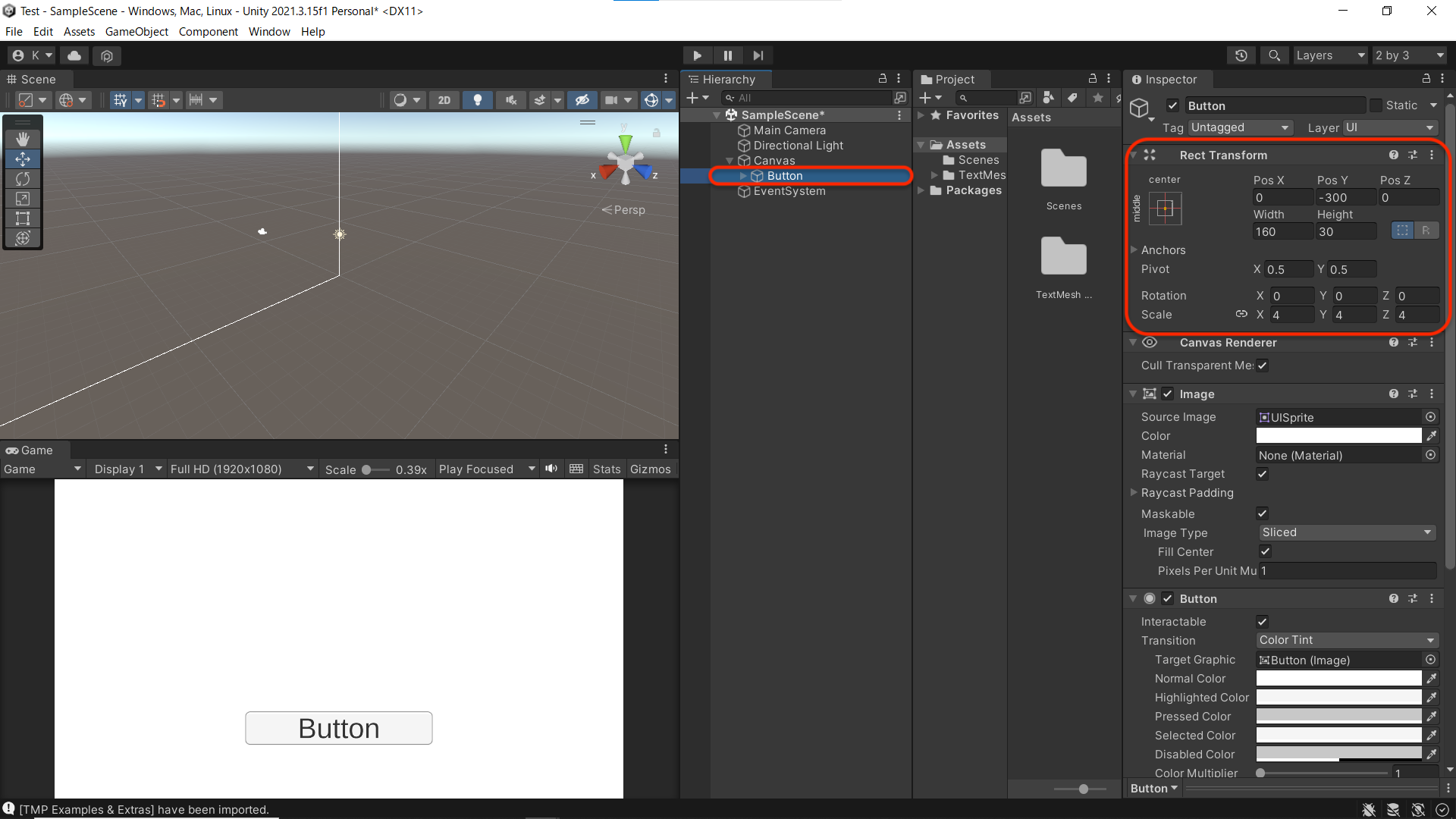

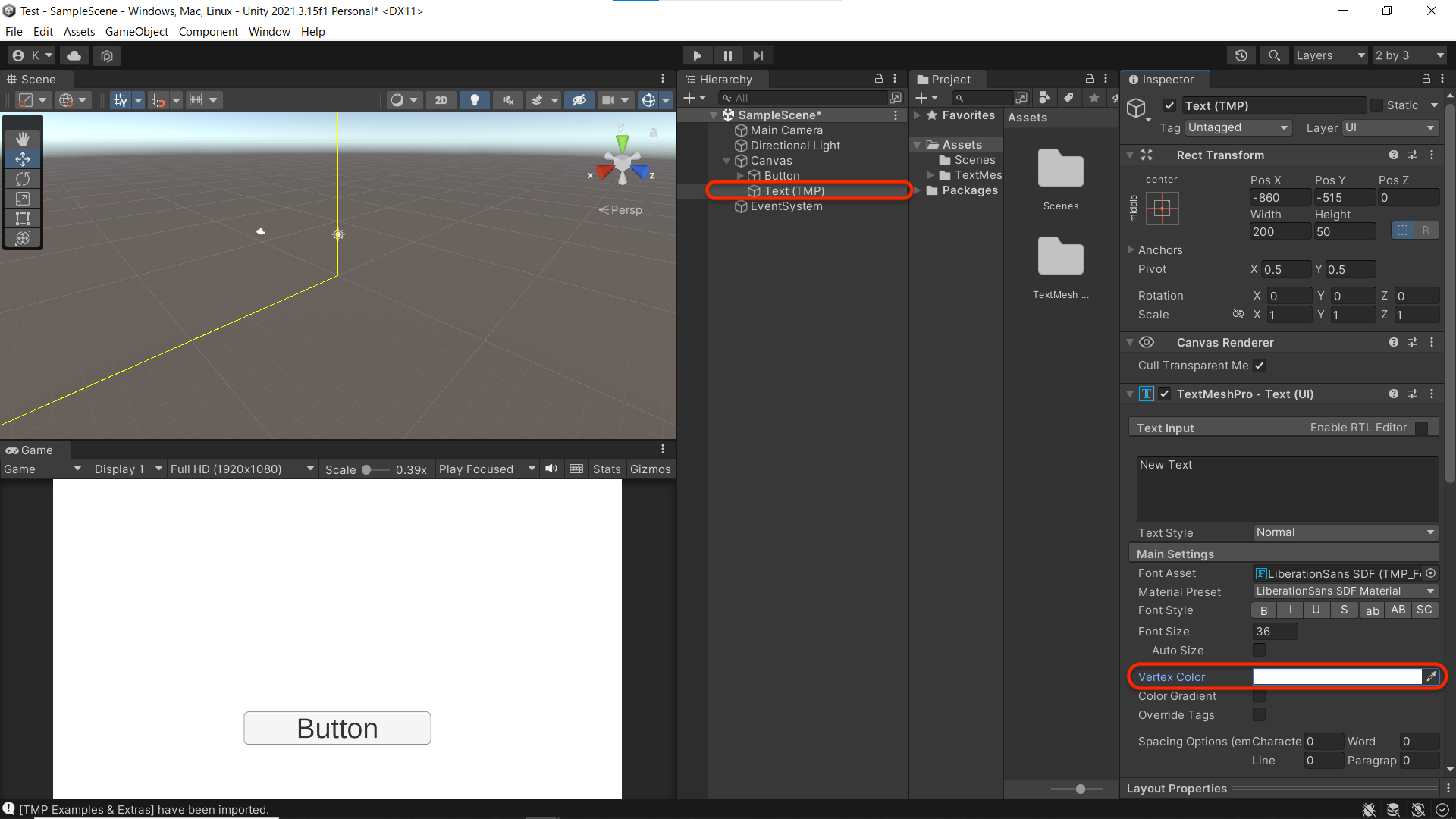

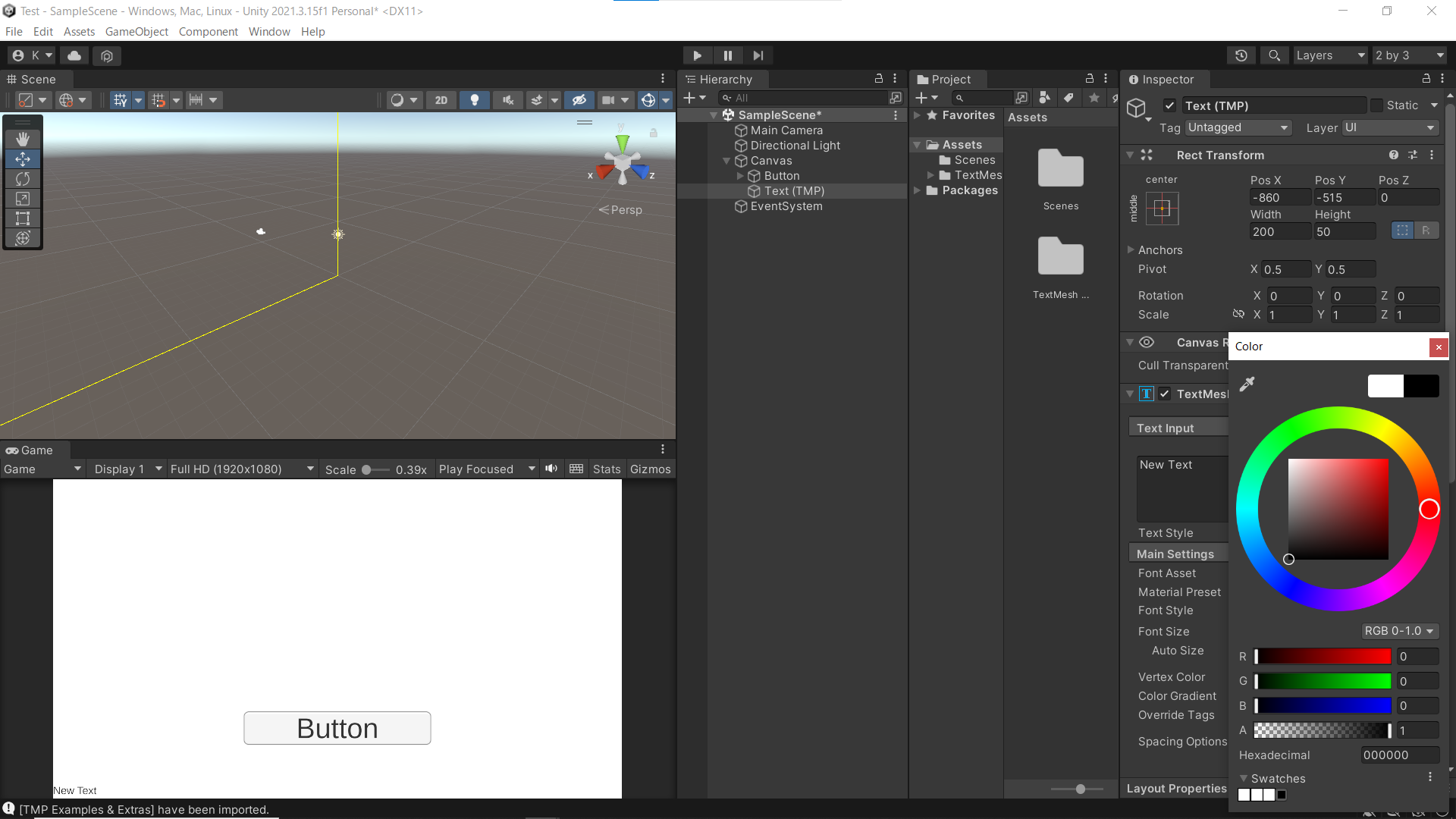

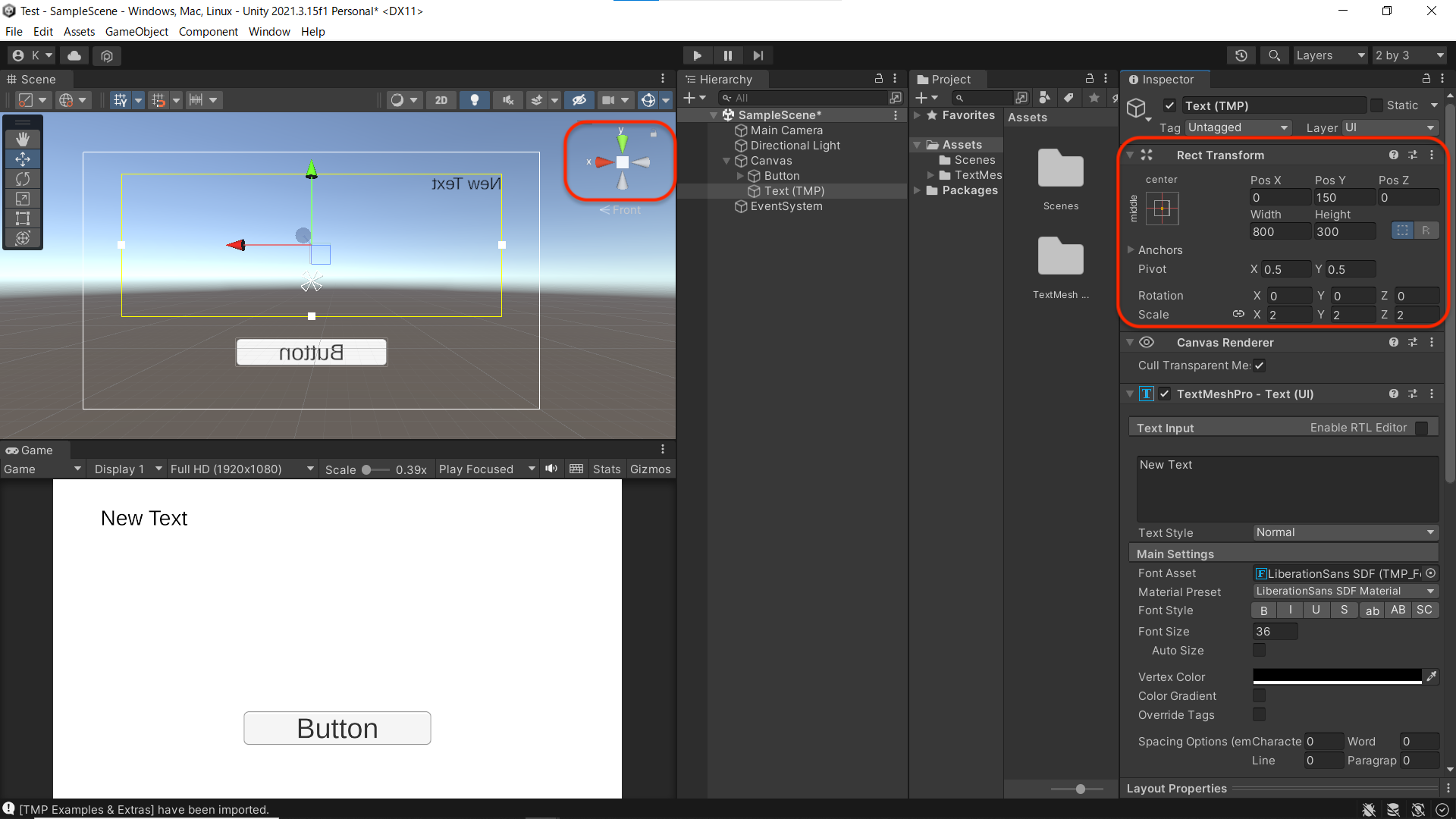

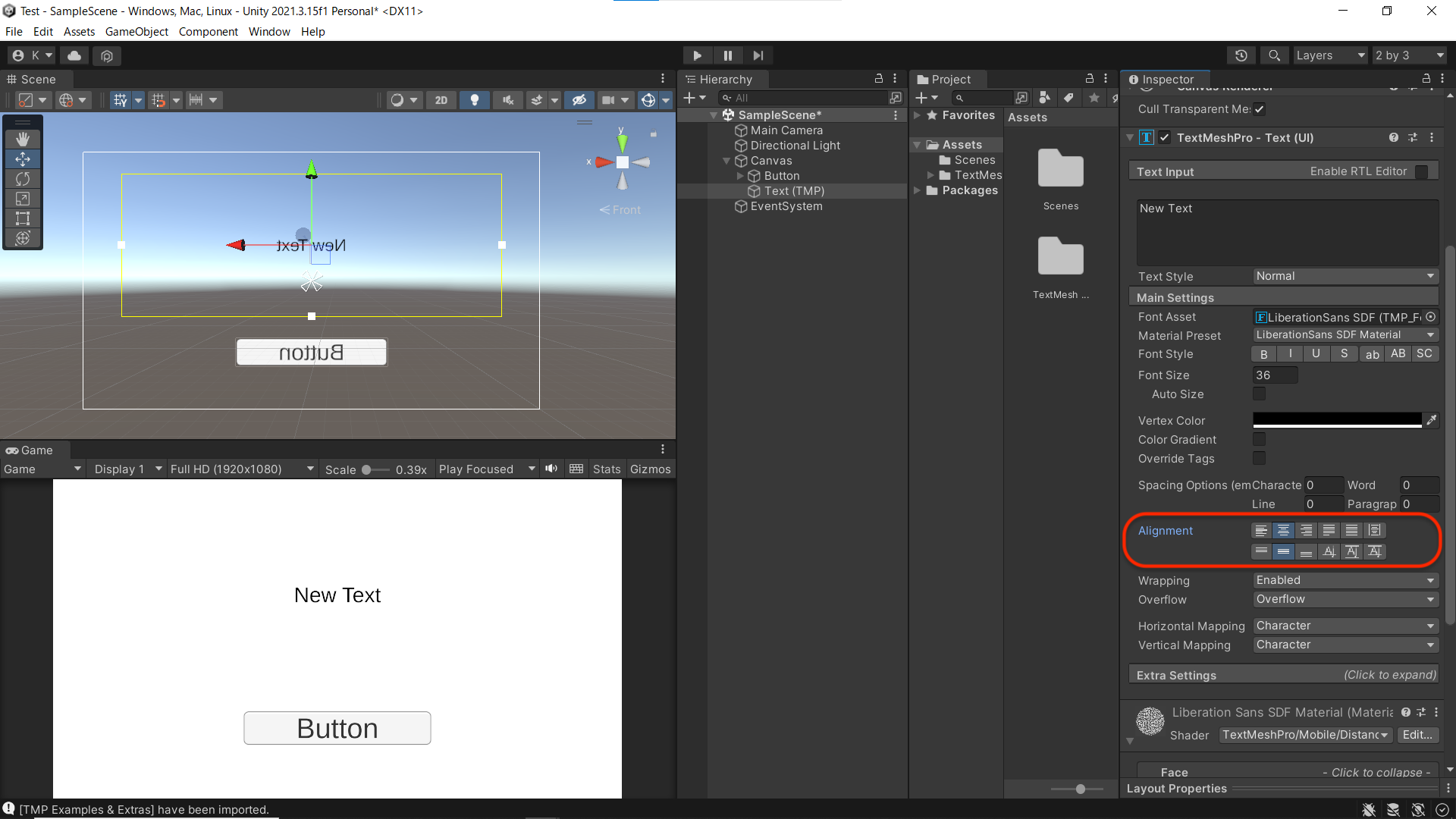

The initial Unity setup configures the UI:

・Change the background and the display size

・Place the button and the text

Implementation

① Whisper settings

② Unity source code

// SpeechToText.cs
using System;
using System.Collections;
using System.Collections.Generic;
using System.IO;
using System.Text;
using TMPro;
using UnityEngine;
using UnityEngine.EventSystems;
using UnityEngine.Networking;

// Attach to a UI object that receives pointer clicks (e.g. the record button).
// First click starts recording; second click stops it, encodes a WAV, and sends it to Whisper.
public class SpeechToText : MonoBehaviour, IPointerClickHandler
{
    bool flagMicRecordStart = false;
    bool catchedMicDevice = false;
    string currentRecordingMicDeviceName = null;
    string recordingTargetMicDeviceName = "MIC_NAME"; // Name of the microphone to use (see the "Mic device name" log)

    const int HeaderByteSize = 44; // Size of the canonical PCM WAV header
    const int BitsPerSample = 16;
    const int AudioFormat = 1;     // 1 = linear PCM

    AudioClip recordedAudioClip;
    int recordedSamples;           // Samples per channel actually captured before stopping
    int samplingFrequency = 44100;
    int maxTimeSeconds = 10;       // Maximum recording time [sec]
    byte[] dataWav;

    string OpenAIAPIKey = "YOUR_API_KEY"; // Set your OpenAI API key

    public TextMeshProUGUI textDisplay; // Reference to the TextMeshProUGUI component

    // Shape of the transcription response: {"text": "..."}
    [Serializable]
    public class WhisperResponse
    {
        public string text;
    }

    void Start()
    {
        catchedMicDevice = false;
        Launch();
    }

    // Look for the target microphone among the connected devices
    void Launch()
    {
        foreach (string device in Microphone.devices)
        {
            Debug.Log($"Mic device name : {device}");
            if (device == recordingTargetMicDeviceName)
            {
                Debug.Log($"{recordingTargetMicDeviceName} found");
                currentRecordingMicDeviceName = device;
                catchedMicDevice = true;
            }
        }

        if (catchedMicDevice)
        {
            Debug.Log("Microphone search succeeded");
            Debug.Log($"currentRecordingMicDeviceName : {currentRecordingMicDeviceName}");
        }
        else
        {
            Debug.Log("Microphone search failed");
        }
    }

    void RecordStart()
    {
        // loop = false: recording stops by itself after maxTimeSeconds
        recordedAudioClip = Microphone.Start(currentRecordingMicDeviceName, false, maxTimeSeconds, samplingFrequency);
    }

    void RecordStop()
    {
        // The clip is always maxTimeSeconds long, so keep only the part actually recorded.
        // GetPosition returns 0 once recording has already ended, so fall back to the full clip.
        int position = Microphone.GetPosition(currentRecordingMicDeviceName);
        Microphone.End(currentRecordingMicDeviceName);
        recordedSamples = position > 0 ? position : recordedAudioClip.samples;

        int blockAlign = recordedAudioClip.channels * BitsPerSample / 8; // Bytes per sample frame
        int byteRate = recordedAudioClip.frequency * blockAlign;         // Bytes per second
        int dataSize = recordedSamples * blockAlign;                     // Bytes of PCM data

        Debug.Log("WAV creation started");
        using (MemoryStream currentMemoryStream = new MemoryStream())
        using (BinaryWriter writer = new BinaryWriter(currentMemoryStream)) // Writes little-endian
        {
            // RIFF chunk: size = total file size - 8 = (HeaderByteSize - 8) + dataSize
            writer.Write(Encoding.ASCII.GetBytes("RIFF"));
            writer.Write((UInt32)(HeaderByteSize - 8 + dataSize));
            writer.Write(Encoding.ASCII.GetBytes("WAVE"));

            // fmt sub-chunk
            writer.Write(Encoding.ASCII.GetBytes("fmt "));
            writer.Write((UInt32)16); // fmt chunk size for PCM
            writer.Write((UInt16)AudioFormat);
            writer.Write((UInt16)recordedAudioClip.channels);
            writer.Write((UInt32)recordedAudioClip.frequency);
            writer.Write((UInt32)byteRate);
            writer.Write((UInt16)blockAlign);
            writer.Write((UInt16)BitsPerSample);

            // data sub-chunk
            writer.Write(Encoding.ASCII.GetBytes("data"));
            writer.Write((UInt32)dataSize);

            // Convert the recorded samples from float [-1, 1] to 16-bit PCM
            float[] floatData = new float[recordedSamples * recordedAudioClip.channels];
            recordedAudioClip.GetData(floatData, 0);
            foreach (float f in floatData)
            {
                writer.Write((short)(Mathf.Clamp(f, -1f, 1f) * short.MaxValue));
            }
            writer.Flush();

            Debug.Log("WAV creation completed");
            dataWav = currentMemoryStream.ToArray();
            Debug.Log($"dataWav.Length {dataWav.Length}");
        }

        StartCoroutine(PostAPI());
    }

    public void OnPointerClick(PointerEventData eventData)
    {
        if (catchedMicDevice)
        {
            if (flagMicRecordStart)
            {
                flagMicRecordStart = false;
                Debug.Log("Mic Record Stop");
                RecordStop();
            }
            else
            {
                flagMicRecordStart = true;
                Debug.Log("Mic Record Start");
                RecordStart();
            }
        }
    }

    IEnumerator PostAPI()
    {
        List<IMultipartFormSection> formData = new List<IMultipartFormSection>();
        formData.Add(new MultipartFormDataSection("model", "whisper-1"));
        // The file part's content type is the audio type, not multipart/form-data
        formData.Add(new MultipartFormFileSection("file", dataWav, "whisper01.wav", "audio/wav"));

        string urlWhisperAPI = "https://api.openai.com/v1/audio/transcriptions";

        using (UnityWebRequest request = UnityWebRequest.Post(urlWhisperAPI, formData))
        {
            request.SetRequestHeader("Authorization", $"Bearer {OpenAIAPIKey}");
            request.downloadHandler = new DownloadHandlerBuffer();

            Debug.Log("Start Request");
            yield return request.SendWebRequest();

            switch (request.result)
            {
                case UnityWebRequest.Result.Success:
                    Debug.Log("Request Succeeded");
                    Debug.Log($"responseData: {request.downloadHandler.text}");
                    // Parse the JSON response and display the transcribed text
                    WhisperResponse response = JsonUtility.FromJson<WhisperResponse>(request.downloadHandler.text);
                    if (response != null && !string.IsNullOrEmpty(response.text))
                    {
                        textDisplay.text = response.text;
                        Debug.Log($"Spoken Text: {response.text}");
                    }
                    break;

                case UnityWebRequest.Result.ProtocolError:
                    Debug.Log("ProtocolError");
                    Debug.Log(request.responseCode);
                    Debug.Log(request.error);
                    Debug.Log(request.downloadHandler.text); // Error details returned by the API
                    break;

                case UnityWebRequest.Result.ConnectionError:
                    Debug.Log("ConnectionError");
                    Debug.Log(request.error);
                    break;

                default:
                    Debug.Log($"Request ended with result: {request.result}");
                    break;
            }
        }
    }
}
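`RecordStop()` is meant to write the standard 44-byte PCM WAV header. Here is a worked example for this script's settings (44,100 Hz, 16-bit), assuming a mono microphone:

- Block align = 1 × 16 / 8 = 2 bytes.
- Byte rate = 44,100 × 2 = 88,200 bytes/s.
- The RIFF chunk size is 36 + data size, which is the file size minus the 8 bytes for "RIFF" and the size field itself.

The sketch below is a Unity-independent encoder that writes those same fields. It assumes plain .NET (Core 2.0 or later, for `Math.Clamp`). `WavSketch` is a hypothetical name.

```csharp
using System;
using System.IO;
using System.Text;

// Hypothetical, Unity-independent 16-bit PCM WAV encoder showing the
// canonical 44-byte header layout.
public static class WavSketch
{
    public static byte[] Encode(float[] interleaved, int channels, int sampleRate)
    {
        const int bitsPerSample = 16;
        int blockAlign = channels * bitsPerSample / 8;      // bytes per sample frame
        int byteRate = sampleRate * blockAlign;             // bytes per second
        int dataSize = interleaved.Length * bitsPerSample / 8;

        using var stream = new MemoryStream(44 + dataSize);
        using var writer = new BinaryWriter(stream);        // always little-endian
        writer.Write(Encoding.ASCII.GetBytes("RIFF"));
        writer.Write((uint)(36 + dataSize));                 // file size minus 8
        writer.Write(Encoding.ASCII.GetBytes("WAVE"));
        writer.Write(Encoding.ASCII.GetBytes("fmt "));
        writer.Write((uint)16);                              // fmt chunk size for PCM
        writer.Write((ushort)1);                             // audio format 1 = PCM
        writer.Write((ushort)channels);
        writer.Write((uint)sampleRate);
        writer.Write((uint)byteRate);
        writer.Write((ushort)blockAlign);
        writer.Write((ushort)bitsPerSample);
        writer.Write(Encoding.ASCII.GetBytes("data"));
        writer.Write((uint)dataSize);
        foreach (float f in interleaved)
        {
            // Clamp so out-of-range samples cannot overflow the short
            writer.Write((short)(Math.Clamp(f, -1f, 1f) * short.MaxValue));
        }
        writer.Flush();
        return stream.ToArray();
    }
}
```

Writing through `BinaryWriter` keeps every field little-endian regardless of platform. Byte-by-byte `BitConverter.GetBytes` output, in contrast, follows the machine's byte order.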

string responseText = request.downloadHandler.text;

string searchText = "\"text\":\"";

int startIndex = responseText.IndexOf(searchText);

if (startIndex != -1)

{

startIndex += searchText.Length;

int endIndex = responseText.IndexOf("\"", startIndex);

if (endIndex != -1)

{

string spokenText = responseText.Substring(startIndex, endIndex - startIndex);

textDisplay.text = spokenText;

Debug.Log($"Spoken Text: {spokenText}");

}

}
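For reference, here is a sketch of the same request `PostAPI()` sends, built with plain .NET `HttpClient` types outside Unity. It assumes .NET Core 3.0 or later for `System.Text.Json`. The request is a `multipart/form-data` POST to the transcription endpoint with three parts:

- a `model` field set to `whisper-1`
- a `file` field holding the WAV bytes
- a `Bearer` authorization header

The sketch also reads the `text` field with a real JSON parser. A plain string search for `"text":"` fails as soon as the JSON has a space after the colon. It also cuts the transcript short at the first escaped quote. `WhisperRequestSketch` and its methods are hypothetical names.

```csharp
using System;
using System.Net.Http;
using System.Net.Http.Headers;
using System.Text.Json;

// Hypothetical plain-.NET sketch of the Whisper transcription request.
public static class WhisperRequestSketch
{
    public const string Endpoint = "https://api.openai.com/v1/audio/transcriptions";

    // Builds (but does not send) the multipart POST request.
    public static HttpRequestMessage Build(byte[] wavBytes, string apiKey)
    {
        var form = new MultipartFormDataContent();
        form.Add(new StringContent("whisper-1"), "model");

        var file = new ByteArrayContent(wavBytes);
        file.Headers.ContentType = new MediaTypeHeaderValue("audio/wav");
        form.Add(file, "file", "whisper01.wav");

        var request = new HttpRequestMessage(HttpMethod.Post, Endpoint) { Content = form };
        request.Headers.Authorization = new AuthenticationHeaderValue("Bearer", apiKey);
        return request;
    }

    // Extracts the "text" field from a response body such as {"text": "..."}.
    public static string ParseText(string json)
    {
        using JsonDocument doc = JsonDocument.Parse(json);
        return doc.RootElement.GetProperty("text").GetString();
    }
}
```

To send the request, you could use something like `using var client = new HttpClient(); var res = await client.SendAsync(WhisperRequestSketch.Build(wav, key)); string text = WhisperRequestSketch.ParseText(await res.Content.ReadAsStringAsync());`. Inside Unity, `JsonUtility.FromJson` with a `[Serializable]` class that has a `text` field plays the same role.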